Pruning Your eCommerce Site: How & Why

Posted by Everett

If there has been one “SEO tactic” that we’ve seen work consistently throughout 2015, it’s the idea of pruning underperforming content out of Google’s index.

Sometimes the underperforming content is the result of outdated SEO tactics like article spinning, or of technical issues such as indexable internal search results or endlessly crawlable faceted navigation. Other times there are thousands of products with little or no content, or with manufacturer-supplied product descriptions. Because the causes vary, it’s important to distinguish between pruning off the site (i.e. removing) and pruning from Google’s index (e.g. a “Robots Noindex” meta tag).

In order to find these opportunities, it helps to first perform a Content Audit. This is not a how-to guide for doing content audits. For step-by-step instructions, refer to this tutorial here on Moz.

But there are some differences between auditing eCommerce content compared to other types of content, like blogs or resource sections. For example, when we use the phrase “eCommerce Content Audit,” we’re limiting the analysis to “catalog” content (i.e. Home, Categories, Products, and a few others). More content auditing tips specific to eCommerce websites can be found in the resources section at the end, and in this blog post.

Again, this isn’t a guide for doing content audits. Think of it more as a guide to pruning eCommerce catalog pages, which we find is the most important outcome of many such projects.

Why you should consider pruning your eCommerce site

There are two specific case studies below, which do a pretty good job of answering this question. But, if you don’t mind, allow me to draw a few parallels first.

Pruning is something that occurs naturally in a variety of ways, from dead limbs and autumn leaves to the development of our adolescent brains. Without pruning, systems tend to get bloated and dysfunctional. That’s why if you don’t take the time to maintain your indexable content inventory by pruning it, Google will do it for you — sometimes at a great cost to overall traffic and revenue for the entire site.

Synaptic pruning = (Use it or lose it)

Even the human brain prunes itself. This plasticity is one of the reasons we dominate the planet. We’re adaptable. We grow new connections when we need to, and prune the rest as time goes on. This is probably the biological origin of the phrase “Use it or lose it.”

At certain stages of life (from about 6 months to 2 years old) we need to soak up as much information as we can. But most of the time we need to focus on what’s important to each of us and let certain things, like riding a skateboard and 80% of what we learned in high school, fall by the wayside. You can’t keep scaling up forever. At some point, there needs to be a scaling down.

When it comes to nerve cells making and losing connections based on how often they are accessed, “Use it or lose it” describes the situation perfectly.

In terms of eCommerce content, “use it” applies to your visitors. If users are not visiting, linking, sharing, or buying that product, you might consider losing it.

Tree pruning = (Remove it to improve others)

Not unlike removing deadweight content from your site, pruning trees involves the careful selection of limbs to remove for the purpose of improving air circulation (crawling) and consolidating light and nutrients (page-level metrics) into the most important branches (pages).

For centuries, people have removed inward-facing, crossed, broken, diseased, and otherwise unwanted branches to improve the health of important trees, or at least to limit the damage to a tree when harvesting firewood.

So, back to why we should prune our sites of unhealthy content…

Because our sites look like this:

Think of these broken and crossed limbs as the types of thin, duplicate, and low-quality content you’ll find on most enterprise eCommerce sites these days. Or any site, really.

Remember back when you could customize internal search result pages to make them look like landing pages (which you should do anyway) instead of boring search results? And then you could mine the internal search logs for keywords with more than one search to automatically “publish” them simply by giving Google a link (which you should definitely not do)? Or how about “article spinning,” remember that?

Then you probably remember what happened around February 2011, when Google rolled out its first Panda update.

Even simple “white hat” tactics, like writing halfway decent content for lots of keyword variations, have started to become less effective and potentially harmful. You don’t need separate pages on your site for “choosing a blue widget,” “how to choose a blue widget” and “choosing blue widgets,” and Google definitely doesn’t need them in its index.

URL pruning = (Improve it or remove it)

When it comes to low-quality content dragging down the rankings of your entire site, “Improve it or remove it” makes the most sense. Removing may involve deleting, redirecting, returning 404/410 status codes, applying “Robots Noindex” meta tags, or other options, depending on the situation. Some of this will be discussed later, but first…

The real reason you should prune your eCommerce site

Assuming you have A LOT more “catalog URLs” indexed than you have categories and SKUs (very common), pruning the site will most likely increase your revenue for a comparatively small investment.

What if I told you this might be the best SEO ROI most large sites could hope to get in 2016?

Case studies

The following two case studies involve real clients for whom Inflow has performed eCommerce Content Audits, including implementation support.

Auto Body Toolmart (Large-scale pruning with a hatchet)

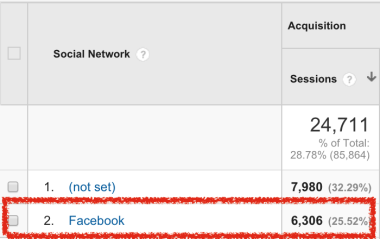

The client, Auto Body Toolmart, had 17,057 pages indexed by Google, according to the Index Status report in Google Search Console. However, the Sitemaps report was showing that Google had only indexed 6,135 of the 25,000 URLs in the XML sitemap. What’s wrong with that picture?

Fewer than a quarter of the pages they wanted Google to know about were indexed. Most of the time this is an architecture issue, like using JavaScript frameworks (e.g. AngularJS, React) without providing a crawl path to paginated pages. Another common cause is inadvertently blocking important crawl-path directories in the robots.txt file, instead of keeping those pages out of the index with a <META NAME="ROBOTS" CONTENT="NOINDEX, FOLLOW"> meta tag.

And yet, nearly 11,000 URLs were indexed that probably shouldn’t have been.
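The gap is simple arithmetic on the two Search Console reports. This sketch uses only the figures quoted above, with hypothetical variable names, and assumes the 6,135 indexed sitemap URLs are included in the 17,057 total.

```python
# Figures quoted above from Google Search Console
indexed_total = 17_057     # Index Status report
sitemap_urls = 25_000      # URLs submitted in the XML sitemap
sitemap_indexed = 6_135    # sitemap URLs Google reported as indexed

# Share of the URLs we *wanted* indexed that actually were
sitemap_coverage = sitemap_indexed / sitemap_urls

# Indexed URLs outside the sitemap (assumes the sitemap URLs are part of the total)
unwanted_indexed = indexed_total - sitemap_indexed

print(f"Sitemap coverage: {sitemap_coverage:.1%}")          # Sitemap coverage: 24.5%
print(f"Indexed but not in sitemap: {unwanted_indexed:,}")  # Indexed but not in sitemap: 10,922
```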

Most of the time something like this comes down to a technical issue, like non-canonicalized sorting and filtering page URLs being indexed. Upon further inspection, we also correlated major traffic drops with early iterations of Google’s Panda and Penguin updates.

According to our Content Audit Strategies Tool, Auto Body Toolmart falls squarely into the bottom-right corner (extra large site with an existing content penalty).

Clicking on “Focus: Content Audit with an eye to Prune” reveals a more detailed prescription:

“Often, we are unable to bring content quality up to par at this scale. Figuring out what can be improved and removing the rest is key. Get the amount of pages indexed down drastically to improve the ratio of good content pages to poor content pages without having to write thousands of pages of copy. Consider removing or noindexing entire sections of the site, or certain page-types, if they would be considered low-quality, thin, duplicate, overlapping, irrelevant…”

From this starting point, Dan Kern and Tim Hampton (Inflow strategists) were able to move forward in the right direction with what limited information they had, while collecting more information to customize the strategy for this particular client.

The gist of their strategy was this: Prune it down heavily, and build it back up as pages are improved (starting with a prioritized group of 1,300 products).

The store had about 20,000 SKUs. Most of them weren’t getting any traffic because they had thin (one or two short bullet points) or duplicate (manufacturer supplied) product copy.

Dan recommended a lot of <META NAME="ROBOTS" CONTENT="NOINDEX, FOLLOW"> meta tags on product pages (11,000 of them, in fact).

Imagine being this client and taking our word that removing more than half of the site from search engine indexes is going to somehow increase revenue.

As you’ll see, they made the right decision.

A major copywriting project is underway in which we are working with the client to get the top 1,300 of those product pages rewritten — all prioritized and managed via their eCommerce content audit dashboard. This will fix duplicate, thin, and other low-quality content at the rate of about 100 product and/or category pages per month.

Post-pruning results

There was a 31% increase in organic traffic with a 28% increase in revenue (despite 11,000 fewer pages indexed) before one word of copy was improved. The only changes implemented were pruning via <META NAME="ROBOTS" CONTENT="NOINDEX, FOLLOW"> and a small disavow file (annotated below).

Organic search before & after

About 11,000 total URLs were removed from the index, yet overall traffic began to increase.

Seasonality would not account for this lift, and YOY traffic was up almost 38%.

Sessions increased by about 31% during the same time period.

We kept all of these products in the catalog so as not to lose any selection from a user-experience perspective. Just because a product page doesn’t rank well, doesn’t have any referral or search traffic, and doesn’t have any links does not mean it won’t sell.

Our end goal is to improve the page and remove the “Robots Noindex” tag so it can eventually be found through organic search once it provides a better user experience.

We’re in the midst of the copywriting project at the moment, and expect fantastic results from rewriting about 100 pages per month (per the client’s budget). We’ll let you know what happens. The long-term revenue increase from an efficient and affordable pruning of 11,000 products from search engine indexes will more than pay for the copywriting of the 1,300 products Dan marked as “Improve” in the content audit.

There are also nearly 700 category pages that have been marked as “Improve” because they need better titles, descriptions, and on-page content. These pages have NOT been removed from the index, and we are working on them in weekly batches. Among the biggest tasks for categories are adding unique content and writing intro descriptions for those that lack them.

TL;DR – Auto Body Toolmart

By noindexing pages with practically zero organic search traffic to begin with, we effectively and efficiently (read: hatchet, not scalpel) pruned Google’s indexation of the site.

However, by keeping the pages on the website and findable via internal search and navigation, we preserved the user experience and direct/referral revenue. Allowing search engines to discover and “follow” the URL is also hugely important for crawlability of the entire site, and will ensure faster indexation/ranking of the page once the content has been improved and the page is released back into search engine indexes.

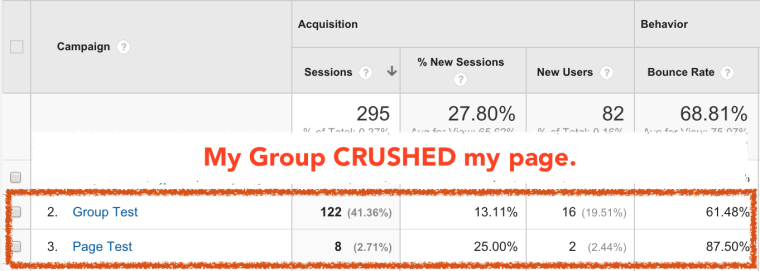

America’s Best House Plans (Content audit combined with link cleanup)

This eCommerce site sells ready-designed house plans direct to consumers. They came aboard after a sharp decline in revenue that was suspected to be linked to the Google Panda and Penguin Algorithm updates.

Tim Hampton started by performing a complete audit to uncover the roots of the problem, and found issues with detrimental backlinks and a high percentage of non-performing catalog pages.

Next, a content audit was performed, which resulted in pruning close to 80% of their catalog pages from search engine indexes.

Post-pruning results

After what seemed like a long wait for Google to release updates and reindex the site, a large upswing in traffic and revenue took place.

This resulted in a 434% increase in revenue from organic traffic YOY. Organic traffic improved 78.48% YOY as well.

The downtick at the end has to do with the selected dates. As you can see, both year-lines dip.

Organic search revenue

After a major jump in May and June, YOY organic search revenue settles back into the forecasted goal range for the month of July.

Notation icon indicates the pruning date.

TL;DR – America’s Best House Plans

By temporarily pruning out 80% of the catalog from search engine indexes and cleaning up their link profile, we saw an impressive multi-month lift in traffic and revenue from search, followed by a course on par with last year, despite having fewer indexed pages.

Moving forward

We will be improving product pages as quickly as possible and re-introducing them into the index in batches. We expect steady improvement over the coming months.

Scalpel work

The examples above deal mostly with large-scale content audits in which our “weapon of choice” is a hatchet. This tends to produce the most noticeable results, which makes for better case studies.

Most of you probably already have scalpel examples of your own.

You have probably taken a small group of average and/or low-quality pages and consolidated them into one awesome page. Did you lose traffic because you had fewer pages indexed, or did traffic to the much better page outpace the combined traffic of the old pages within the first couple of months? Please share your results in the comments below.

Now that we’ve made the case for pruning, let’s have a look at the different types of pruning options available to us.

Pruning options

Pruning isn’t necessarily synonymous with deleting. There are several different ways to prune an eCommerce website, depending on the specific situation.

Temporarily pruning from the index while leaving in the catalog

You can “temporarily” prune pages out of the index, while leaving them on the site, using the <META NAME="ROBOTS" CONTENT="NOINDEX, FOLLOW"> meta tag. This is a good solution for very large sites with a product page copywriting project that is going to take several months to complete.

Example: A product page with manufacturer-supplied content that needs to be rewritten.
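In a page template, this usually comes down to emitting the tag conditionally. A minimal sketch, assuming a hypothetical `needs_rewrite` flag set on each product during the audit; note the straight quotes, which HTML requires around attribute values.

```python
from dataclasses import dataclass

@dataclass
class Product:
    sku: str
    title: str
    needs_rewrite: bool  # hypothetical audit flag, e.g. manufacturer-supplied copy

def robots_meta(product: Product) -> str:
    """Return the robots meta tag for a product page's <head>.

    Pages awaiting a rewrite stay on the site but are kept out of the index;
    "follow" lets crawlers keep passing through their links.
    """
    if product.needs_rewrite:
        return '<meta name="robots" content="noindex, follow">'
    return ""  # no tag: search engines default to index, follow

# Hypothetical product record
page = Product(sku="ABT-1001", title="Dent Puller Kit", needs_rewrite=True)
print(robots_meta(page))  # <meta name="robots" content="noindex, follow">
```

Once the copy is rewritten, flipping the flag releases the page back into the index without touching its URL.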

Temporarily pruning from the index as well as the catalog

This would be the same implementation as above, while also removing the product from the site’s navigation and internal search results. This way, direct links will continue to lead to the page and Google will continue to crawl the URL (probably less frequently the longer it stays non-indexable), but user experience and SEO best practices are both maintained.

Example: A long-term out-of-stock product page that will return next season, or as soon as you replace that unreliable, expensive vendor who always screws up the drop-shipping.
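The only difference from the previous option is whether the product still appears in navigation and internal search. A sketch, assuming hypothetical `indexable` and `listed` flags on each catalog item: the URL keeps returning a 200 (so direct links still work), while unlisted products are filtered out of shopper-facing listings.

```python
from dataclasses import dataclass

@dataclass
class CatalogItem:
    url: str
    name: str
    indexable: bool  # False -> page carries noindex, follow
    listed: bool     # False -> hidden from navigation and internal search

# Hypothetical catalog
CATALOG = [
    CatalogItem("/widgets/blue-widget", "Blue Widget", True, True),
    CatalogItem("/widgets/seasonal-widget", "Seasonal Widget", False, False),
]

def internal_search(query: str) -> list[str]:
    """Internal search only returns listed products."""
    q = query.lower()
    return [i.url for i in CATALOG if i.listed and q in i.name.lower()]

def resolve(url: str) -> tuple[int, str]:
    """Catalog URLs still resolve; only the robots directive changes."""
    for item in CATALOG:
        if item.url == url:
            robots = "index, follow" if item.indexable else "noindex, follow"
            return 200, robots
    return 404, ""

print(internal_search("widget"))            # ['/widgets/blue-widget']
print(resolve("/widgets/seasonal-widget"))  # (200, 'noindex, follow')
```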

Permanently pruning from the index while leaving in the catalog

This one could be implemented in different ways, depending on the situation. You don’t want these URLs indexed by Google, but you may or may not want them to get crawled and “followed.” These pages typically serve a purpose, and so user experience (i.e. conversion rate) would suffer if they were to be removed from the site completely.

Example 1: Deep-faceted navigation URLs with multiple parameters.

At a certain point, even crawling needs to be cut off, or spiders could keep discovering new URL strings for who knows how long. A rel=canonical tag doesn’t do the trick here, because it doesn’t stop crawling. Apply a “Robots Noindex” meta tag and wait for the URLs to be recrawled. Once they’re no longer indexed, add a “Disallow:” statement to the robots.txt file.
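The order of these two steps matters: once a URL is disallowed in robots.txt, crawlers can no longer fetch it to see the noindex tag. A sketch of the decision, assuming facets are query parameters and that “deep” means more than one facet; the parameter names and threshold are hypothetical and would come from your own audit.

```python
from urllib.parse import urlsplit, parse_qs

# Hypothetical facet parameters used by the faceted navigation
FACET_PARAMS = {"color", "size", "brand", "price", "sort"}
MAX_FACETS = 1  # assumption: URLs with more than one facet are "deep"

def facet_depth(url: str) -> int:
    """Count how many facet parameters a URL carries."""
    query = parse_qs(urlsplit(url).query)
    return sum(1 for name in query if name in FACET_PARAMS)

def facet_action(url: str, still_indexed: bool) -> str:
    """Next pruning step for a faceted navigation URL.

    Step 1: deep facet URLs get a noindex meta tag.
    Step 2: once they have dropped out of the index, disallow them
            in robots.txt to stop crawling altogether.
    """
    if facet_depth(url) <= MAX_FACETS:
        return "keep"
    return "noindex" if still_indexed else "disallow"

print(facet_action("/tools?color=red", still_indexed=True))           # keep
print(facet_action("/tools?color=red&size=xl", still_indexed=True))   # noindex
print(facet_action("/tools?color=red&size=xl", still_indexed=False))  # disallow
```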

Example 2: Product variants (see also “Consolidating two or more pages” below).

Sometimes you need a Blue Widget product page and a Green Widget product page because that provides the highest conversion rate from category pages in which the visitor can see all color options at once. You can either rewrite the product copy to make each of them completely unique, or you can point a “rel=canonical” tag from all of the other color options to one of them. After a certain scale, the latter becomes the most likely option.
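The canonical option can be generated rather than hand-edited. A sketch, assuming hypothetical variant URLs and assuming the primary variant is simply the best seller (your own rule may differ). Every variant keeps its page for shoppers, but all of them, including the primary one, carry a canonical tag pointing at the same URL.

```python
def canonical_tags(variants: dict[str, int], base: str) -> dict[str, str]:
    """Map each variant URL to the rel=canonical tag it should carry.

    variants: variant URL path -> units sold (hypothetical metric used
    to pick the primary variant).
    """
    primary = max(variants, key=variants.get)
    tag = f'<link rel="canonical" href="{base}{primary}">'
    return {path: tag for path in variants}

tags = canonical_tags(
    {"/widgets/blue-widget": 120, "/widgets/green-widget": 45},
    base="https://www.example.com",
)
for path, tag in tags.items():
    print(path, "->", tag)
```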

Permanently pruning from the index as well as the catalog

One solution if you don’t want URLs indexed or accessed by search engines or shoppers would be to simply delete them. We do a lot more of that with blog content than we do with catalog content, such as product and category pages.

Simply delete the page and allow it to 404 or 410 if you want the URL out of the index quickly and it doesn’t have any traffic, links, sales, or purpose.

Example 1: A discontinued product page URL with no external links.

Example 2: Deep category pages showing zero products (stub pages).
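Stub categories can be found mechanically. A minimal sketch, assuming the category tree and per-category product counts are available as simple dictionaries (a hypothetical export format): a category is a stub if neither it nor any of its subcategories contains a product.

```python
# Hypothetical export: category path -> child category paths
CHILDREN = {
    "/tools": ["/tools/dent-repair", "/tools/sanders"],
    "/tools/dent-repair": ["/tools/dent-repair/slide-hammers"],
    "/tools/dent-repair/slide-hammers": [],
    "/tools/sanders": [],
}
# Hypothetical export: category path -> products assigned directly to it
PRODUCT_COUNT = {
    "/tools": 0,
    "/tools/dent-repair": 0,
    "/tools/dent-repair/slide-hammers": 0,
    "/tools/sanders": 14,
}

def total_products(category: str) -> int:
    """Products in a category plus all of its descendants."""
    return PRODUCT_COUNT.get(category, 0) + sum(
        total_products(child) for child in CHILDREN.get(category, [])
    )

def stub_categories() -> list[str]:
    """Categories that would render an empty product grid."""
    return [c for c in CHILDREN if total_products(c) == 0]

print(stub_categories())
# ['/tools/dent-repair', '/tools/dent-repair/slide-hammers']
```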

Another solution is to put them behind a password-protected wall. And a far less drastic, often more useful solution is to consolidate the pages.

Consolidating two or more pages

It could be as easy as deleting the page and redirecting its URL to another, which would permanently remove it from both the catalog and Google’s index while consolidating traffic and page authority into the other page.

Example 1: Any time you redirect one URL to another, you are consolidating pages.

Acme Widget 1.0 is a discontinued product. Its product page URL has several high-quality external links because it was the first of its kind. This URL gets redirected to the next generation of that product line, Acme Widget 2.0, with big “New and Improved” red lettering on the page.
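Redirects like this are usually managed as a map from old URL to new URL. A sketch, assuming a hypothetical in-memory map and a hypothetical Acme Widget 3.0: resolving to the final destination means that when 2.0 is retired later, the 1.0 URL (and its links) still gets a single 301 instead of a redirect chain.

```python
from typing import Optional

REDIRECTS = {
    # old URL -> replacement URL (hypothetical paths)
    "/acme-widget-1-0": "/acme-widget-2-0",
}

def resolve_redirect(path: str, redirects: dict[str, str]) -> Optional[str]:
    """Follow the map to its final destination, or return None if no redirect.

    Raises ValueError if the map contains a loop.
    """
    if path not in redirects:
        return None
    seen = {path}
    target = redirects[path]
    while target in redirects:
        if target in seen:
            raise ValueError(f"redirect loop at {target}")
        seen.add(target)
        target = redirects[target]
    return target

print(resolve_redirect("/acme-widget-1-0", REDIRECTS))  # /acme-widget-2-0

# Later, 2.0 is replaced by 3.0: the 1.0 URL now goes straight to 3.0
REDIRECTS["/acme-widget-2-0"] = "/acme-widget-3-0"
print(resolve_redirect("/acme-widget-1-0", REDIRECTS))  # /acme-widget-3-0
```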

Example 2: Any time you use a “rel=canonical” tag with a URL other than the one in the address bar when you visit the page, you are consolidating.

Using a “rel=canonical” tag to indicate that /Mens/Accessories/Ties/ and /Accessories/Mens/Ties/ are essentially the same page (in this case, leaving both in the catalog, though there are certainly other options).

Example 3: Product variants may or may not need their own landing page. It all depends on the situation.

Combining Big Shiny Blue Widget 2.0 and Big Shiny Red Widget 2.0 via product variant dropdowns in cases where “colors” are not commonly used in searches for that product. This may or may not include any redirects.

Example 4: Often it is best to combine the content in a useful, seamless way so several average pages become one strong page.

That SEO company you hired back in 2011 put up category-style landing pages (Curated collections? Buying guides?) for every conceivable long-tail variation of product-related keyword searches. They’re indexed and in the sitemap, but your visitors don’t have any real way of getting to them via the navigation (and that’s a good thing). Some of the content is worth saving, but you don’t need ten “category” pages about exhaust manifolds. A good solution would be to take stock of the different topics and group the pages by topic. Then choose the best-performing page from each topic set, move any great content from the other pages into it, and redirect the others to that page.
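The grouping step can be sketched in a few lines. This assumes each landing page has already been tagged with a topic during the audit and has an organic sessions count (both hypothetical columns); it keeps the best performer per topic and maps the rest to it as 301s. Moving the good content over is still an editorial job.

```python
from collections import defaultdict

# Hypothetical audit rows: (URL, topic assigned by the auditor, organic sessions)
pages = [
    ("/exhaust-manifolds-guide", "exhaust manifolds", 420),
    ("/best-exhaust-manifolds", "exhaust manifolds", 95),
    ("/cheap-exhaust-manifolds", "exhaust manifolds", 12),
    ("/dent-puller-buying-guide", "dent pullers", 310),
]

def consolidation_plan(rows):
    """Return {redirected URL: surviving URL}, keeping the top page per topic."""
    by_topic = defaultdict(list)
    for url, topic, sessions in rows:
        by_topic[topic].append((sessions, url))
    plan = {}
    for candidates in by_topic.values():
        candidates.sort(reverse=True)  # highest sessions first
        keeper = candidates[0][1]
        for _, url in candidates[1:]:
            plan[url] = keeper
    return plan

for old, new in consolidation_plan(pages).items():
    print(f"301 {old} -> {new}")
```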

What to prune in an eCommerce content audit

Once you have made a complete inventory of all indexable catalog URLs and have settled on a general strategy of pruning out a good chunk of the site from the search indexes (at least temporarily until pages can be improved), it’s time to make some important decisions. But first, here are some things to look out for:

Thin content

Thin content comes in many forms. Usually the page serves a purpose on the site, which means we can’t just delete it. However, it often has no business in the search results until the page is improved.

Example 1: Product page on which “Made in the USA” is the only description

Prune via: <META NAME="ROBOTS" CONTENT="NOINDEX, FOLLOW"> meta tag until useful content has been added.

Some pages do serve a purpose in the search results, even if they have “thin” content.

Example 2: Top-level category page with no static content

Do NOT prune. Just improve as soon as possible with helpful content.
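A rough first pass at flagging thin pages can be automated by word count, with an exception for page types you’ve decided to keep indexed regardless. This sketch assumes a hypothetical 50-word threshold and page-type labels from your crawl; the threshold is a judgment call, not a rule from Google.

```python
THIN_WORDS = 50  # hypothetical threshold; tune it against your own catalog

def word_count(text: str) -> int:
    return len(text.split())

def thin_content_action(page_type: str, description: str) -> str:
    """Suggest an action for a page based on its static copy."""
    if word_count(description) >= THIN_WORDS:
        return "keep"
    if page_type == "top-level category":
        return "improve"          # Example 2: don't prune, just improve
    return "noindex, follow"      # Example 1: prune until copy is added

print(thin_content_action("product", "Made in the USA"))  # noindex, follow
print(thin_content_action("top-level category", ""))      # improve
```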

Duplicate content

This can exist on the same domain, or on other websites. It is a very common problem at very large scales with enterprise eCommerce websites. And if you’re dealing with big drop-shipping plays (auto parts, SWAG…) #fuggedaboutit.

Example 1: Product page with manufacturer copy duplicated on other websites

Prune via <META NAME="ROBOTS" CONTENT="NOINDEX, FOLLOW"> meta tag until useful, unique content has been written.

Example 2: Product variants on separate URLs with the same copy

- Write unique content for each SKU on its own URL, or…

- Consolidate product variants onto one page with a drop-down selector, and then…

- Redirect or “rel=canonical” the rest to the new page

Example 3: Paginated category pages that repeat the same static content

- Only show the static content on the first page, and…

- Prune via <META NAME="ROBOTS" CONTENT="NOINDEX, FOLLOW"> meta tag on paginated URLs
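Spotting manufacturer copy is easier if you keep the supplier’s feed text. A sketch using Python’s standard `difflib.SequenceMatcher` to compare a product description with the manufacturer’s text; the 0.8 cutoff and sample strings are assumptions, and catching copies on other websites would need a separate crawl or plagiarism-checking tool.

```python
from difflib import SequenceMatcher

SIMILARITY_CUTOFF = 0.8  # hypothetical threshold for "effectively the same copy"

def _normalize(text: str) -> str:
    """Lowercase and collapse whitespace so formatting differences don't count."""
    return " ".join(text.lower().split())

def similarity(site_copy: str, manufacturer_copy: str) -> float:
    """Similarity ratio from 0.0 (nothing shared) to 1.0 (identical)."""
    return SequenceMatcher(
        None, _normalize(site_copy), _normalize(manufacturer_copy)
    ).ratio()

def duplicate_action(site_copy: str, manufacturer_copy: str) -> str:
    if similarity(site_copy, manufacturer_copy) >= SIMILARITY_CUTOFF:
        return "noindex, follow until rewritten"
    return "keep indexed"

mfr = "Heavy-duty slide hammer with 5 lb weight and comfortable grip."
print(duplicate_action(mfr.upper(), mfr))  # noindex, follow until rewritten
rewritten = ("Our techs use this slide hammer daily to pull deep dents "
             "out of quarter panels without cracking the paint.")
print(duplicate_action(rewritten, mfr))
```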

Underperforming content

This could include a variety of situations. Generally speaking, we look at the following areas when making judgements about whether to improve and/or remove a page:

- No links

- No shares

- No traffic

- No sales
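The four checks above can be combined into a simple first-pass rule. This is a sketch under hypothetical assumptions: metrics come from your crawl, link, and analytics exports over a fixed window, any sales mean a page is worth improving rather than removing (a page with no search traffic may still sell), and a person still makes the final call. “Remove” here could mean any of the options discussed earlier.

```python
from dataclasses import dataclass

@dataclass
class UrlMetrics:
    external_links: int
    shares: int
    organic_sessions: int
    orders: int

def audit_action(m: UrlMetrics) -> str:
    """First-pass recommendation for a catalog URL (hypothetical rules)."""
    signals = [m.external_links, m.shares, m.organic_sessions, m.orders]
    if all(v == 0 for v in signals):
        return "remove"  # no links, shares, traffic, or sales
    if m.organic_sessions == 0:
        return "improve (noindex until rewritten)"
    return "keep"

print(audit_action(UrlMetrics(0, 0, 0, 0)))    # remove
print(audit_action(UrlMetrics(0, 0, 0, 3)))    # improve (noindex until rewritten)
print(audit_action(UrlMetrics(4, 1, 250, 9)))  # keep
```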

Discontinued and long-term out-of-stock products

If a product has been discontinued, or is out of stock for months at a time, consider removing its page from the index. While it is tempting to hold on to the traffic going to discontinued product pages, a better user experience would be to redirect that URL to the next-generation product (or the closest category page), so that a better page starts to rank for better keywords instead of landing unsatisfied searchers on an out-of-stock product page. If the out-of-stock product page also lacks any links and direct or referral traffic, it may be more efficient to remove it completely and return a 404 or 410 status code.
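The decision described above fits in a small rule. A sketch, assuming hypothetical fields for the successor product and closest category, and treating any external links or direct/referral traffic as reason enough to redirect rather than return a 410.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Discontinued:
    url: str
    successor_url: Optional[str]  # next-generation product, if any
    category_url: str             # closest category page
    external_links: int
    direct_referral_sessions: int

def retire(p: Discontinued) -> tuple[int, Optional[str]]:
    """Return (HTTP status, redirect target) for a discontinued product URL."""
    has_value = p.external_links > 0 or p.direct_referral_sessions > 0
    if not has_value:
        return 410, None  # gone: nothing worth passing on
    return 301, p.successor_url or p.category_url

old = Discontinued("/acme-widget-1-0", "/acme-widget-2-0", "/widgets", 12, 40)
print(retire(old))  # (301, '/acme-widget-2-0')
```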

Indexable search results

I recommend removing these from the index, and then blocking them in the robots.txt file, as recommended by Google here and here. However, we did have one client that was getting so much traffic to these results, it was difficult to make the case for pruning them on the spot. This is just another case of Google making liars of us.
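Before deploying a robots.txt change, it’s worth confirming it blocks only what you intend. A sketch using Python’s standard `urllib.robotparser`, assuming internal search results live under a hypothetical `/search` path. The standard-library parser uses simple prefix matching and does not understand the `*` and `$` wildcards Google supports, so test wildcard rules with another tool.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rule blocking internal search result URLs
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

def crawlable(url: str) -> bool:
    return parser.can_fetch("Googlebot", url)

print(crawlable("https://www.example.com/search?q=blue+widget"))  # False
print(crawlable("https://www.example.com/widgets/blue-widget"))   # True
```

As noted above, deploy the Disallow only after the search pages have dropped out of the index; otherwise crawlers can’t see their noindex tags.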

Obviously, every situation is different and one-size-fits-all advice usually turns out to be too general for some. The above recommendations are provided as general examples only.

Packaged resources

We’ve put together a few resources that will help you break this project down into bite-sized chunks. They’re packaged into a single folder called the eCommerce Content Audit Toolkit. Here’s what it comes with:

Content audit template with automated strategies by URL

This Google Spreadsheet can be thought of as a content audit template with training wheels. Unlike the template I shared in the Content Audit Tutorial, which has 8 tabs and no strategy automation, this one has only two tabs, one of which isn’t even actively used.

The idea is that you simply import the site crawl and URL metrics, and nearly everything else is done for you.

Experienced content auditing pros will probably want to use the original file, but feedback from other marketers indicated a need for a stripped-down version with strategy automation features. Hat tip to Alex Juel for doing a lot of the leg-work on this thing.

Content audit strategies for common scenarios

Like the automated strategy formulas for each URL in the spreadsheet above, this tool is meant to help those new to content audits when choosing an overall strategy for the project as a whole. Access it online any time at the link above. The “toolkit” includes a printable PDF version.

Example stakeholder reports

Audits mean nothing if they don’t result in actionable insights and, ultimately, implementation of your recommendations. One way to make your insights more actionable is to break them up by stakeholder. As an eCommerce SEO, you can provide added value to the rest of the company by producing reports for eCommerce directors, marketing executives, merchandisers, copywriters, developers, and more.

eCommerce content audit white paper

This is an overview of the concept with several more case studies. It would make a great introduction for marketing executives and others without a lot of technical SEO experience.

Instructions for making the most out of the toolkit

It’s no good to get a bunch of files if you don’t know what to do with them.

Other useful resources

- How to Do Content Audits (with a link to a step-by-step on Moz)

- Ian Lurie’s Guide to Creating Content Strategy in “Only” 652 Steps

- How to Conduct a Content Audit by Rebecca Lieb on Marketing Land

- Screaming Frog (crawler)

- Botify (crawl and analysis)

- Xenu (crawler)

- URL Profiler (crawler, API metrics compiler, awesome tool)

- More Things to Consider for eCommerce Catalog Content Audits

This post was too long; I didn’t read it (TL;DR)

The two most important takeaways are:

1. Don’t be afraid to make pruning choices when the metrics back up your strategy.

When you perform a content audit, it allows you to make recommendations based on real data that can easily be shared with decision makers. You can also use case studies and small-scale tests to bolster your case.

Don’t be afraid to take out a good portion of the site if the metrics tell the story of useless URL-bloat.

2. Pruning isn’t synonymous with deleting.

You can (temporarily) remove URLs from the index while managing the copywriting process, returning them to the index as they are brought up to standards.

That’s about all I have to say about pruning eCommerce sites at the moment. Have you done a content audit yet? How did it go? What were your sticking points? Any major successes or failures?
